Anatomy of an AI Art Generator App — how some smart technology choices helped me build and launch NightCafe Creator in under a month

Last year I started working on an AI Art Generator app called NightCafe Creator. I recently wrote an article about how I conceived and then validated the concept. This article follows on from that one: it outlines the technology stack I used to build the app, and how my choices helped me build and launch it in under a month.

First, a brief timeline

October 14, 2019 — Looking back at my commit history, this is the day I switched focus from validating the idea of selling AI-generated artworks, to actually building the app.

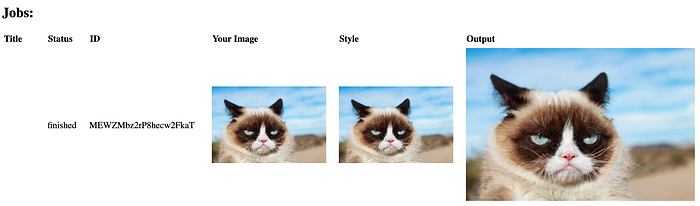

October 28 — 2 weeks later I sent a Slack message to some friends showing them my progress, a completely un-styled, zero polish “app” (web page) that allowed them to upload an image, upload a style, queue a style-transfer job and view the result.

October 30 — I sent another Slack message saying “It looks a lot better now” (I’d added styles and a bit of polish).

November 13 — I posted it to Reddit for the first time. Launched.

Requirements

A lot of functionality is required for an app like this:

- GPUs in the cloud to queue and run jobs on

- An API to create jobs on the GPUs

- A way for the client to be alerted of finished jobs and display them (e.g. websockets or polling)

- A database of style transfer jobs

- Authentication and user accounts so you can see your own creations

- Email and/or native notifications to alert the user that their job is finished (jobs run for 5+ minutes, so the user has usually moved on)

- And of course all the usual things like UI, a way to deploy, etc.

How did I achieve all this in under a month? It’s not that I’m a crazy-fast coder. I don’t even know Python, the language the neural style transfer algorithm is written in. I put it down to a few guiding principles that led to some smart choices (and a few flukes).

Guiding Principles

- No premature optimisation

- Choose the technologies that will be fastest to work with

- Build once for as many platforms as possible

- Play to my own strengths

- Absolute MVP (Minimum Viable Product) — do the bare minimum to get each feature ready for launch as soon as possible

The reasoning behind the first four principles can be summarised by the last one. Absolute MVP is derived from the lean startup principle of getting feedback as early as possible. Early feedback tells you whether you’re on the right track, keeps you from wasting time on the wrong features (features nobody wants), and lets you start measuring your impact. I’ve found it especially important for side-projects, because they are so often abandoned before release, yet long after an MVP could have launched.

Now that the stage has been set, let’s dive into what these “smart technology choices” were.

Challenge #1 — Queueing and running jobs on cloud GPUs

I’m primarily a front-end engineer, so this was the challenge that worried me most, and therefore the one I tackled first. A more experienced devops engineer would probably have set up one or more GPU servers on Amazon EC2 or Google Compute Engine and written an API and queueing system for them. I could foresee a few problems with this approach:

- Being a front-end engineer, it would take me a long time to do all this

- I could still only run one job at a time (unless I set up auto-scaling and load balancing, which I know even less about)

- I don’t know enough devops to be confident in maintaining it

What I wanted instead was to have all of this abstracted away for me: something like AWS Lambda (i.e. serverless functions), but with GPUs. Neither Google nor AWS provides such a service (at least at the time of writing), but with a bit of Googling I did find some options. I settled on a platform called Algorithmia. Here’s a quote from their home page:

Data scientists never have to worry about infrastructure again

Perfect! Algorithmia abstracts away the infrastructure, queueing, autoscaling, devops and API layer, leaving me to simply port the algorithm to the platform and be done. (I haven’t touched on it here, but I was simply using an open-source style-transfer implementation in TensorFlow.) Because I don’t really know Python, the port still took me a while, but I estimate that I saved weeks or even months by offloading the hard parts to Algorithmia.
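The client side of calling Algorithmia was correspondingly thin. As I understand Algorithmia’s REST conventions, each published algorithm was an HTTPS endpoint that takes a JSON body plus an `Authorization: Simple <API key>` header. The sketch below only builds such a request (nothing is sent); the algorithm path, the `StyleTransferInput` fields (including `jobId`) and the function name are hypothetical.

```typescript
// Hypothetical input for a style-transfer job; field names are illustrative,
// not Algorithmia's or NightCafe's actual schema.
interface StyleTransferInput {
  jobId: string;      // lets the algorithm record its result against this job
  contentUrl: string; // the user's uploaded image
  styleUrl: string;   // the chosen style image
}

interface AlgoRequest {
  url: string;
  init: {
    method: "POST";
    headers: Record<string, string>;
    body: string;
  };
}

// Builds (but does not send) a call to an Algorithmia-style endpoint of the
// form https://api.algorithmia.com/v1/algo/<owner>/<algorithm>/<version>.
function buildAlgoRequest(
  apiKey: string,
  algoPath: string, // e.g. "someuser/StyleTransfer/0.1.0" (hypothetical)
  input: StyleTransferInput,
): AlgoRequest {
  return {
    url: `https://api.algorithmia.com/v1/algo/${algoPath}`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Simple ${apiKey}`,
      },
      body: JSON.stringify(input),
    },
  };
}
```

You would then send it with `fetch(url, init)`. Two design notes: the call belongs in server-side code (a Cloud Function, say) so the API key never reaches the browser, and because jobs run for 5+ minutes, the finished result is better delivered through the database than by waiting on this HTTP response.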

Challenge #2 — The UI

This is me. This is my jam. The UI was an easy choice: I just had to play to my strengths, so going with React was a no-brainer. I used Create React App initially because it’s the fastest way to get off the ground.

However, I also decided, against my guiding principles, to use TypeScript for the first time. I’d been noticing TypeScript show up in more and more job descriptions, blog posts and JS libraries, and realised I needed to learn it at some point, so why not right now? Adding TypeScript definitely slowed me down at times, and it was still slowing me down at launch a month later. Now, a few months on, I’m glad I made this choice, not for speed or MVP reasons but purely for personal development. I now feel a bit less safe when working with plain JavaScript.
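A small example of the kind of safety I mean: model a job’s status as a string-literal union (the status names here are hypothetical) and TypeScript can check that every case is handled, something plain JavaScript cannot do.

```typescript
// Hypothetical job statuses, for illustration only.
type JobStatus = "queued" | "running" | "completed" | "failed";

// Only type-checks when every member of the union has already been handled.
function assertNever(value: never): never {
  throw new Error(`Unhandled status: ${String(value)}`);
}

function statusLabel(status: JobStatus): string {
  switch (status) {
    case "queued":
      return "Waiting for a GPU";
    case "running":
      return "Creating your artwork";
    case "completed":
      return "Done!";
    case "failed":
      return "Something went wrong";
    default:
      // If a new status is added to JobStatus but not handled above,
      // `status` is no longer `never` here and compilation fails.
      return assertNever(status);
  }
}
```

A typo such as `case "complete":` is also a compile error, since that string is not a member of `JobStatus`.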

Challenge #3 — A database of style-transfer jobs

I’m much better with databases than with devops, but as a front-end engineer, they’re still not really my specialty. As with my search for a cloud GPU solution, I knew I needed an option that abstracts away the hard parts (setup, hosting, devops, etc.). I also thought the data was fairly well suited to NoSQL (jobs could just live under users). I’d used DynamoDB before, but even that had its issues (like an overly verbose API). I’d heard a lot about Firebase but never actually used it, so I watched a few videos. I was surprised to learn that not only was Firebase a good database option, it also had services like simple authentication, cloud functions (much like AWS Lambda), static site hosting, file storage, analytics and more. As the Firebase website puts it, Firebase is:

A comprehensive app development platform

There were also plenty of React libraries and integration examples, which made the choice easy. I decided to go with Firebase for the database (Firestore, more specifically), and to make use of its other services where necessary. It was super easy to set up (all through a GUI), and I had a database running in no time.
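To make “jobs could just live under users” concrete, here is one way the data could be laid out in Firestore, with each user’s jobs in a subcollection. The collection names, fields and helpers are hypothetical; with the Firebase v9 web SDK, the same location would be addressed as `doc(db, "users", uid, "jobs", jobId)`.

```typescript
// Hypothetical shape of a style-transfer job document (field names are
// illustrative, not NightCafe's actual schema).
interface JobDoc {
  status: "queued" | "running" | "completed" | "failed";
  contentUrl: string; // uploaded content image
  styleUrl: string;   // chosen style image
  resultUrl?: string; // set once the GPU job has finished
  createdAt: number;  // milliseconds since the Unix epoch
}

// Reject IDs that would silently change the depth of a path.
function pathSegment(id: string): string {
  if (id === "" || id.includes("/")) {
    throw new Error(`Invalid document ID: "${id}"`);
  }
  return id;
}

// Each user's jobs live in their own subcollection: users/{uid}/jobs/{jobId}.
function jobsCollectionPath(uid: string): string {
  return `users/${pathSegment(uid)}/jobs`;
}

function jobPath(uid: string, jobId: string): string {
  return `${jobsCollectionPath(uid)}/${pathSegment(jobId)}`;
}

// Example document a new job might start with.
const newJob: JobDoc = {
  status: "queued",
  contentUrl: "https://example.com/photo.jpg",
  styleUrl: "https://example.com/style.jpg",
  createdAt: Date.now(),
};
```

A side benefit of nesting jobs under users is that Firestore security rules can match `users/{uid}/jobs/{jobId}` and compare `uid` with `request.auth.uid`, so each user can only read their own creations.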

Challenge #4 — Alerting the client when a job is complete

This also sounded like a fairly difficult problem. A couple of traditional options that might have come to mind were:

- Polling the jobs database to look for a “completed” status

- Keeping a websocket open to the Algorithmia layer (this seemed like it would be very difficult)

I didn’t have to think about this one too much, because I realised, after choosing Firestore for the database, that the problem was already solved. Firestore is a realtime database: the client keeps a persistent connection open to the database server, which pushes updates straight into your app. All I had to do was write to Firestore from my Algorithmia function when the job was finished, and the rest was handled automagically. What a win! This one was a bit of a fluke, but now that I’ve realised its power, I’ll definitely keep this little trick in my repertoire.
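The pattern that makes this work is a subscription: the client registers a listener on the job document once, and the GPU side simply writes the finished state. In the Firebase v9 web SDK that listener is `onSnapshot(doc(db, ...), callback)`. The self-contained sketch below mimics the same flow with a tiny in-memory store so the sequence of events is easy to follow; the `JobStore` class is hypothetical and not part of Firebase.

```typescript
type JobStatus = "queued" | "running" | "completed" | "failed";

interface Job {
  status: JobStatus;
  resultUrl?: string;
}

type Listener = (job: Job) => void;

// Hypothetical in-memory stand-in for a realtime document store such as
// Firestore. It is NOT the Firebase API; it only mimics the push flow.
class JobStore {
  private docs = new Map<string, Job>();
  private listeners = new Map<string, Set<Listener>>();

  // Roughly like onSnapshot: delivers the current document (if one exists)
  // straight away, then every later write. Returns an unsubscribe function.
  subscribe(path: string, listener: Listener): () => void {
    const set = this.listeners.get(path) ?? new Set<Listener>();
    this.listeners.set(path, set);
    set.add(listener);
    const current = this.docs.get(path);
    if (current) listener(current);
    return () => {
      set.delete(listener);
    };
  }

  // A write (e.g. from the GPU job when it finishes) is pushed to every
  // listener on that path, so the client never has to poll.
  set(path: string, job: Job): void {
    this.docs.set(path, job);
    this.listeners.get(path)?.forEach((listener) => listener(job));
  }
}

// Usage: the client subscribes once; the backend's writes arrive as events.
const store = new JobStore();
const seen: JobStatus[] = [];
const unsubscribe = store.subscribe("users/u1/jobs/j1", (job) => {
  seen.push(job.status);
});
store.set("users/u1/jobs/j1", { status: "queued" });
store.set("users/u1/jobs/j1", { status: "completed", resultUrl: "https://example.com/result.jpg" });
unsubscribe();
```

Compared with polling, the client does no work between updates, and the backend needs no knowledge of who is listening.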

Challenge #5 — Authentication, Notifications and Deployment

These also came as a bit of a fluke, thanks to my discovery of Firebase. Firebase makes authentication easy (especially with the readily available React libraries), and it also offers static site hosting (perfect for a Create React App build) and a notifications API (Firebase Cloud Messaging). Without Firebase, rolling my own authentication would have taken at least a week using something like Passport.js, or a bit less with Auth0. With Firebase it took less than a day.

Native notifications would have taken me even longer. In fact, I wouldn’t even have thought about including native notifications in the MVP release if it hadn’t been for Firebase. Getting notifications working took more than a day (they’re a bit of a complex beast), but still dramatically less time than rolling my own solution.

For email notifications, I created a Firebase Cloud Function that listens for database updates, something Cloud Functions support out of the box. If an update corresponds to a job being completed, the function uses the SendGrid API to email the user.
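The “is this update a completed job?” check is worth getting right, because an update trigger fires on every write to the document. In a first-generation Cloud Function it would sit inside `functions.firestore.document("users/{uid}/jobs/{jobId}").onUpdate((change, context) => ...)`, comparing `change.before.data()` with `change.after.data()`. The sketch below keeps only that decision as a pure function; the `status` and `emailSent` fields are hypothetical, and the SendGrid call itself is left out.

```typescript
// Hypothetical job fields relevant to the decision.
interface JobState {
  status: "queued" | "running" | "completed" | "failed";
  emailSent?: boolean;
}

// Email only on the transition *into* "completed". Later writes to an
// already-completed job (e.g. setting emailSent) must not trigger a resend.
function shouldEmailOnUpdate(before: JobState, after: JobState): boolean {
  return (
    before.status !== "completed" &&
    after.status === "completed" &&
    !after.emailSent
  );
}
```

Checking the transition, rather than just `after.status === "completed"`, matters because any later write to the same document fires the trigger again. Background triggers can also occasionally be delivered more than once, so a fully duplicate-proof version would need to claim a flag like `emailSent` in a transaction before sending.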

Creating an email template is always a pain, but I found the BEE Free HTML email creator and used it to export a template and convert it into a SendGrid Transactional Email Template (the BEE Free template creator is miles better than SendGrid’s).

Finally, Firebase static site hosting made deployment a breeze. I could deploy from the command line via the Firebase CLI using a command as simple as:

npm run build && firebase deploy

which, of course, I turned into an even simpler script:

npm run deploy

A few things I learned

The speed and success of this project really reinforced my belief in the guiding principles I followed. By doing each thing in the fastest, easiest way, I was able to build and release a complex project in under a month. By releasing so soon, I was able to get plenty of user feedback and adjust my roadmap accordingly. I’ve even made a few sales!

Another thing I learned is that Firebase is awesome. I’ll definitely be using it for future side-projects (though I hope that NightCafe Creator is successful enough to remain my only side-project for a while).

Things I’ve changed or added since launching

Of course, doing everything the easiest/fastest way means you might need to replace a few pieces down the track. That’s expected, and it’s fine. What matters is that, while making each decision, you consider how hard a piece would be to replace later and how likely it is that you’ll need to.

One big thing I’ve changed since launching is swapping the front-end from Create React App to Next.js, and the hosting to Zeit Now. I knew that Create React App doesn’t do server-side rendering, which matters for SEO, but I’d been thinking I could just build a static home page for search engines. I later realised that server-side rendering was also going to be important for link previews when sharing to Facebook and other apps that use Open Graph tags, because the crawlers that build those previews generally don’t run the page’s JavaScript. I honestly hadn’t considered the Open Graph aspect of SEO before choosing CRA, and Next.js would probably have been a better choice from the start. Oh well, live and learn!
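For context, this is roughly what a server-rendered creation page has to include in its initial HTML for a link preview to work. The function and its input fields are hypothetical; in Next.js you would typically render equivalent `<meta>` tags inside `next/head` rather than building strings by hand.

```typescript
// Hypothetical data for one shared creation.
interface PreviewInfo {
  title: string;
  description: string;
  imageUrl: string; // absolute URL of the generated artwork
  pageUrl: string;  // canonical URL of the creation page
}

// Escape characters that would break out of an HTML attribute value.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Builds the og:* tags a preview crawler looks for in the returned HTML.
function openGraphTags(info: PreviewInfo): string {
  const tags: Array<[string, string]> = [
    ["og:type", "website"],
    ["og:title", info.title],
    ["og:description", info.description],
    ["og:image", info.imageUrl],
    ["og:url", info.pageUrl],
  ];
  return tags
    .map(([property, content]) => `<meta property="${property}" content="${escapeAttr(content)}" />`)
    .join("\n");
}
```

Because each shared creation needs its own title and image, these tags vary per page, which is why a single static home page couldn’t cover them.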

Please try my app

I’ll end this post with a plea: please try my app! NightCafe Creator is a Progressive Web App, so you just need to click the link on any device to use it. I’d love to see what kind of interesting art you can generate. Post one of your creations in the comments!

Also keep in mind that what you see now is NOT what was launched on November 13. I’ve been constantly improving the app in the 3 months since launch (based on valuable user feedback, of course), and it’s a lot more polished now than it was on launch day.

Of course if you’ve got any feedback on the app, please reach out to me at nightcafestudio at gmail dot com. If you’ve got feedback or comments on this article, post them below along with your creations.

If you want to stay up to date with my journey, and news about NightCafe, please subscribe to the newsletter and follow me on Medium, Twitter and Reddit.